Computing “at the edge”: bringing artificial intelligence everywhere

By José Luis Espinosa, Chief Engineer/Manager – Ubotica Technologies and Associate Professor at ESI-UCLM.

How did we get to today's artificial intelligence?

The recent rise of artificial intelligence is undeniable, to the point that it has earned a place in the press and in the public imagination. It is difficult today not to have heard about its new applications, including its integration into autonomous cars and robots, assistance in medical diagnosis, and generative systems such as ChatGPT or DALL·E.

What may seem surprising is that the basic concepts behind these systems date back many years. Neural networks trace their origins to 1943 [1]. However, it is only in the last two decades that the conditions needed for the boom in artificial intelligence systems have been met [2], thanks to:

1) The evolution of processing hardware, through the use of graphics cards (GPUs), which covers the great computational demand these systems have when learning.

2) Big data, which provides the large amount of data that these techniques require.

3) The evolution of software and deep learning techniques.

Artificial intelligence “at the edge”

The evolution of artificial intelligence techniques has led to increasingly powerful but also more complex intelligent systems, which require high-performance machines or cloud computing resources. But what happens when limitations prevent us from using those resources to run our intelligent system? A clear example is the main area in which we work at Ubotica: providing autonomy and intelligence to systems deployed in space, which is currently “the final frontier” for human beings.

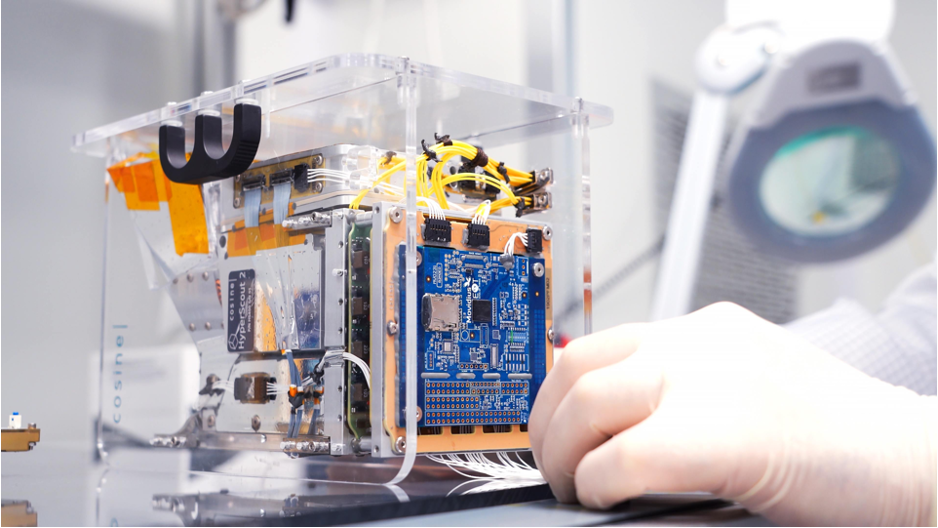

One of the cases in which these restrictions are even more drastic is that of nanosatellites. These small satellites are booming thanks to investment from space agencies such as ESA and NASA and from companies like SpaceX, due to their relatively low cost and the possibilities they offer. Even so, these devices do not have the same capacity to communicate with Earth as a larger satellite.

It is for these cases that computing “at the edge” arises, moving the execution of artificial intelligence algorithms directly to the place where the information is captured. This approach has several advantages:

1) Privacy. By performing operations where the data is captured, it is not necessary to send sensitive information, such as images, over the network.

2) Reduced latency. By not having to send this data and wait to receive the results, the device itself would be able to make a decision in a short period of time. For example, if a nanosatellite or rover detected an obstacle, it could autonomously decide the best course of action to avoid it.

3) Reduction of required bandwidth. This is especially notable in the use case of nanosatellites for Earth observation. These devices capture large images (several GiB) but can only send information to Earth at a very low rate, which makes it unfeasible to constantly send data for processing at a ground station. Thanks to computing “at the edge”, it is enough to send only the information obtained by processing the images, such as the notification of an emergency following the detection of a fire or any other type of dangerous event.
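
The bandwidth argument can be made concrete with a little arithmetic. The sketch below compares the time needed to downlink one raw image with the time needed to send only a short alert. All figures (image size, alert size and link rate) are hypothetical values chosen for illustration, not the specifications of any real satellite, and protocol overhead is ignored.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def transfer_seconds(payload_bytes: int, link_bits_per_second: float) -> float:
    """Time to send a payload over a link, ignoring protocol overhead."""
    return payload_bytes * 8 / link_bits_per_second


# Hypothetical figures, chosen only for illustration.
IMAGE_BYTES = 2 * GIB      # one raw capture ("several GiB" in the text)
ALERT_BYTES = 256          # a short "fire detected at this position" message
DOWNLINK_BPS = 1_000_000   # assumed downlink rate of 1 Mbit/s

raw_time = transfer_seconds(IMAGE_BYTES, DOWNLINK_BPS)
alert_time = transfer_seconds(ALERT_BYTES, DOWNLINK_BPS)

print(f"raw image:  {raw_time / 3600:.1f} hours")
print(f"alert only: {alert_time * 1000:.3f} milliseconds")
print(f"ratio:      {raw_time / alert_time:,.0f}x")
```

Under these assumed numbers, the raw image occupies the link for hours while the alert takes milliseconds, which is why processing on board and sending only the result is attractive.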

What is necessary at a technological level?

In order to apply this paradigm, two factors are necessary:

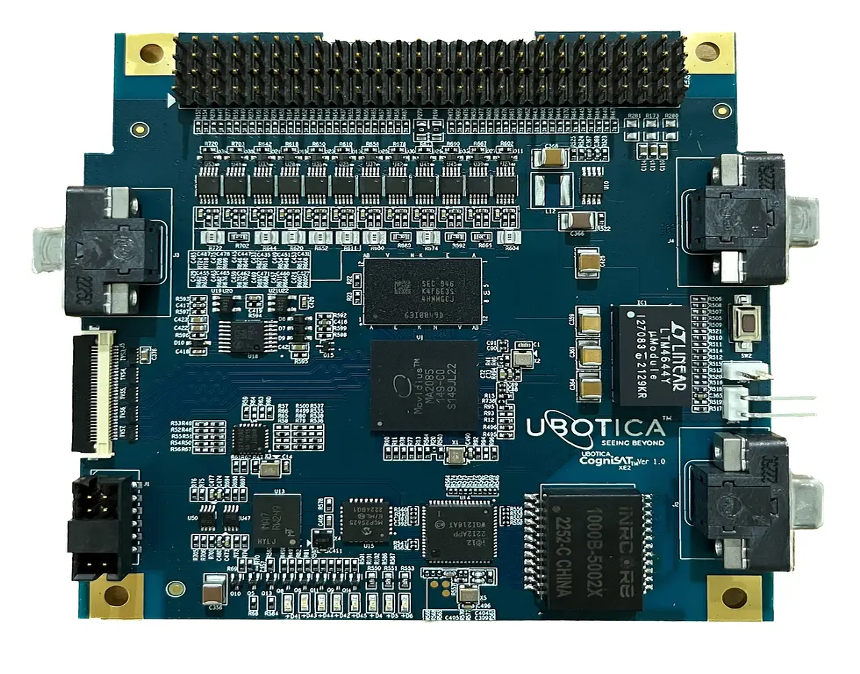

1) The development of specific embedded systems for running artificial intelligence applications. Although the main characteristic of these systems must be high computing power, they also need to be small and to have low energy consumption in order to be sent into space. This is the case of our XE2 board, based on the Myriad devices developed by Intel Movidius, which we use at Ubotica in all types of developments and solutions related to the aerospace sector and Industry 4.0. Such systems have also been used in other highly restrictive settings, such as the detection of poachers in protected areas.

2) The use of specific techniques that allow artificial intelligence systems to run on this hardware since, although its computing capacity is high, it is difficult to match the performance of a GPU under size and power restrictions. That is why, on many occasions, reduced versions of the models used in more complex systems are employed, or the precision of the operations is reduced by using fewer bits to store floating-point values (for example, FP16 instead of FP32), or quantization techniques are applied that restrict the possible values to a small set (even to integer values).
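
The two precision-reduction ideas mentioned above can be illustrated with a small sketch. It uses only the Python standard library: `struct` stores a value as FP32 or FP16 and reads it back, and a simple symmetric linear scheme maps weights to 8-bit integers. The weight values and the helper names (`round_trip`, `quantize_int8`, `dequantize`) are assumptions made for this example; real deployment toolchains use more elaborate methods, and this is not a description of the one used on any particular board.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Store a Python float in the given struct format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric linear quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Map the integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


weights = [0.1, -0.52, 0.25, 1.0]  # hypothetical model weights

fp32 = [round_trip(w, "<f") for w in weights]  # 4 bytes per value
fp16 = [round_trip(w, "<e") for w in weights]  # 2 bytes per value
q, scale = quantize_int8(weights)              # 1 byte per value
restored = dequantize(q, scale)

for w, a, b, c in zip(weights, fp32, fp16, restored):
    print(f"{w:+.4f}  fp32 err={abs(w - a):.2e}  "
          f"fp16 err={abs(w - b):.2e}  int8 err={abs(w - c):.2e}")
```

Each step halves the storage per weight (4, 2 and 1 bytes) at the cost of a larger rounding error, which is the trade-off that has to be balanced against model accuracy when targeting constrained hardware.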

What do I need to work in this context?

As we have seen, artificial intelligence “at the edge” is booming and has become a very particular job niche. Although it is possible to enter it with knowledge of only hardware or only artificial intelligence, those of us working in it have had to move toward a much more multidisciplinary profile.

In my particular case, with an initial specialization in artificial intelligence, I have had to learn to take hardware restrictions into account when designing and training intelligent systems. Other colleagues focused on hardware have had to learn how intelligent systems work in order to integrate and validate them on the devices.

This clashes with the way current university degrees at the European level are divided into specialization tracks, in which a single branch is prioritized. As a result, the additional training needed is often borne by the students themselves or by the company that hires them, through courses, in-house training, or master's degrees of a specific or general nature, such as the MUII at the ESI.

Therefore, I would like to end this article with a reflection that I always share with both my co-workers and my students, given both the need for multidisciplinarity and the constant appearance of new technologies: one of the main abilities that a computer science graduate must have is being able to understand and learn new things.

References

[1] McCulloch, W.S., & Pitts, W. (1943). A logical calculus of the ideas immanent in nervous activity. The Bulletin of Mathematical Biophysics, 5, 115-133.

[2] Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. MIT Press.