Measuring the intensity of hate messages on the internet

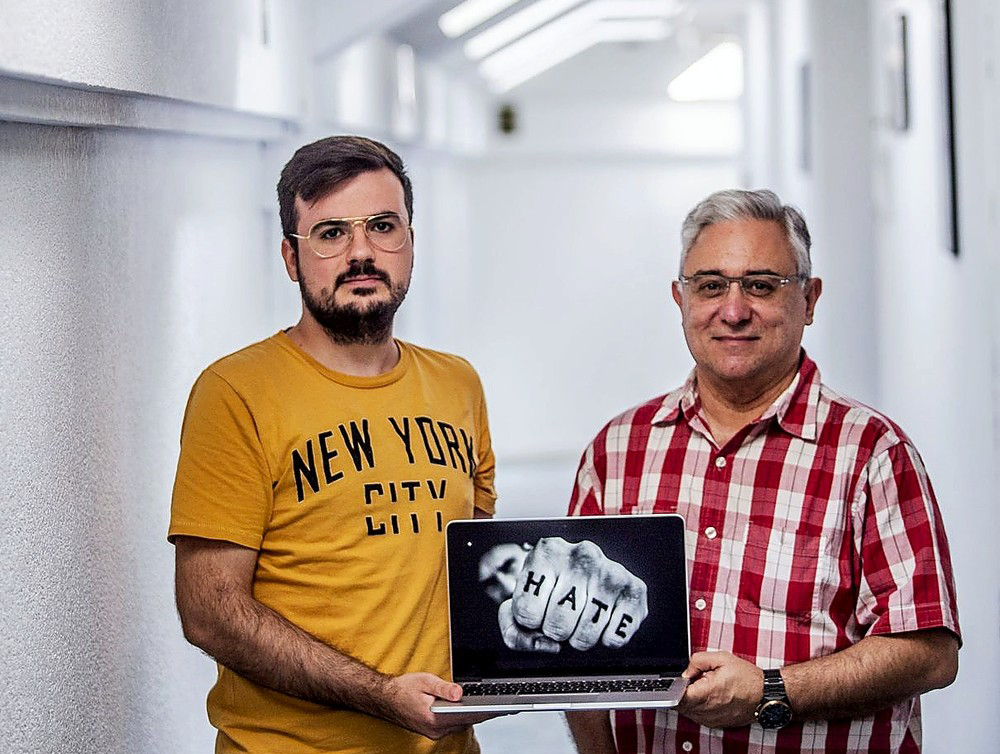

ESI graduate Andrés Montoro, together with professors Adán Nieto and José Ángel Olivas, has created an initiative that can find the 'limit' of comments posted online

After the jihadist attacks in Europe, social networks carried messages with xenophobic content directed at the Arab world. At that time, UCLM Computer Engineering student Andrés Montoro wondered how the intensity of that hatred could be measured, and he created software to assess the content. The work earned him honors as his final degree project, carried out with the support of UCLM professors Adán Nieto, of Law, and José Ángel Olivas, of Computer Science and a member of the Smile research group. It could become a large-scale research project, for which funding has been requested from the regional government.

"Hate crimes are a pretty hot topic, given the control over what is published on the internet," Montoro explained. Until now, messages could be reviewed manually, but their number keeps growing, and that is where artificial intelligence becomes necessary to review the content, filter it, and flag to platform owners where a crime may have been committed. In Germany, for example, the media, including social networks, are already given 24 hours to remove content that incites hatred.

Building an application of this type starts with its training, which was done through an experiment carried out with the faculty's law students. From it, the domain ontology was extracted: a set of related terms that, in this case, have to do with hate. The result was an application similar to existing ones, based on syntax and semantics. "We use these natural language processing techniques to detect messages, which we then classify according to their intensity using sentiment analysis and fuzzy logic." To this were added Article 510 of the Penal Code, the Rabat Plan of Action, and the expert's own knowledge to build a taxonomy, a knowledge map.
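
As a rough illustration of the detection step, the sketch below matches a message against a tiny ontology of related terms. It is not the project's software: the categories, the placeholder words, and the names `ONTOLOGY`, `tokenize`, and `match_categories` are all assumptions made for this example, and a real system would rely on a much richer vocabulary plus syntactic and semantic analysis.

```python
import re

# Hypothetical mini-ontology: category -> related terms.
# The categories and words are placeholders, not the project's real vocabulary.
ONTOLOGY = {
    "dehumanization": {"vermin", "plague"},
    "call_to_action": {"expel", "attack"},
}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def match_categories(text, ontology=ONTOLOGY):
    """Return, per category, the sorted ontology terms found in the text."""
    tokens = set(tokenize(text))
    hits = {}
    for category, terms in ontology.items():
        found = tokens & terms
        if found:
            hits[category] = sorted(found)
    return hits
```

A message that matches no category returns an empty dictionary and would simply not be passed on to the intensity classification.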

"It is a model to detect the intensity of hate speech with a series of variables that affect the message," summarizes Montoro. In this sense, for each piece of writing, it is analyzed whether, in addition to hatred, violence is incited by asking for action against a group or if there is a climate in which these messages may have greater or lesser impact. In addition, the sender and the dissemination, the shared messages, are key. By adding the content with the metadata, "an artificial intelligence system" is created that works with fuzzy logic, one in which there are no absolutes, and that allows a communication to be analyzed to know "to what degree a message is potentially criminal," he explained. olives.

The work of the graduate and the professors could become, if it is approved, a wide-ranging research project with several PhDs involved in creating a key technology for the future of social networks. Software of this type would serve both to monitor the web and filter hate messages and to make a legal assessment of these communications, determining what is and what is not hate. It would make it possible to know the 'limits' of hate because, as with humor, there is a border between what is an opinion and what is a crime. "In criminal law, hate speech is very ambiguous because of freedom of expression. Jurists have a hard time agreeing, but we have provided a measurable way to identify hate."

Source: La Tribuna de Ciudad Real (Hilario L. Muñoz)